I am a research scientist at FAIR, Meta. I graduated with a PhD in Computer Science from UT Austin, advised by Dr. Kristen Grauman. My PhD research was at the intersection of video understanding and embodied AI.

Before coming to UT, I was an intern at MALL Lab, IISc, working with Dr. Partha Talukdar. I completed my B.E. in Computer Science at BITS Goa.

Contact: tushar.nagarajan@utexas.edu | tusharn@meta.com

CV: Link

Publications

|

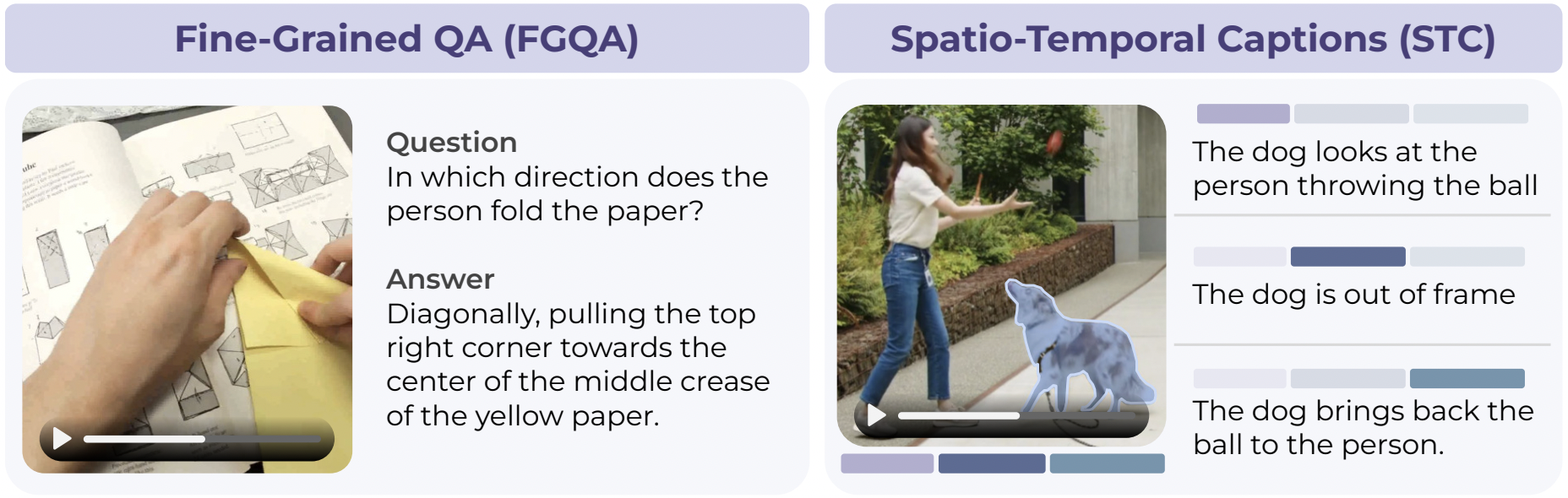

PerceptionLM: Open-Access Data and Models for Detailed Visual Understanding. Jang Hyun Cho*, Andrea Madotto*, Effrosyni Mavroudi*, Triantafyllos Afouras*, Tushar Nagarajan*, Muhammad Maaz*, Yale Song*, Tengyu Ma*, Shuming Hu*, Suyog Jain, Miguel Martin, Huiyu Wang, Hanoona Rasheed, Peize Sun, Po-Yao Huang, Daniel Bolya, Nikhila Ravi, Shashank Jain, Tammy Stark, Shane Moon, Babak Damavandi, Vivian Lee, Andrew Westbury, Salman Khan, Philipp Krähenbühl, Piotr Dollár, Lorenzo Torresani, Kristen Grauman, Christoph Feichtenhofer. NeurIPS 2025 (Spotlight). [paper] [code]

Switch-a-View: Few-Shot View Selection Learned from Edited Videos. Sagnik Majumder, Tushar Nagarajan, Ziad Al-Halah, Kristen Grauman. ICCV 2025. [paper] [project]

|

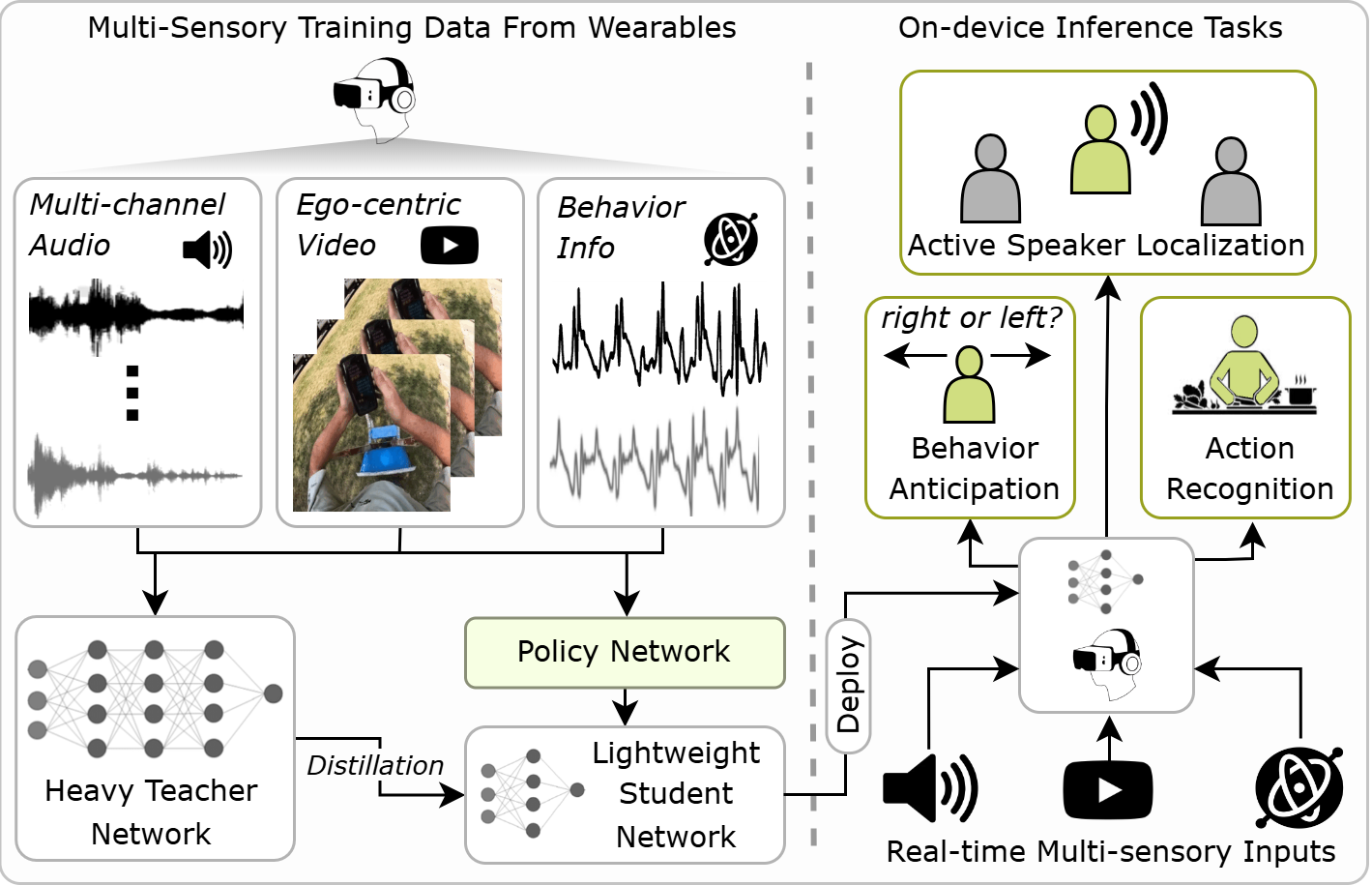

EgoAdapt: A Joint Distillation and Policy Learning Framework for Efficient Multisensory Egocentric Perception. Sanjoy Chowdhury, Subrata Biswas, Sayan Nag, Tushar Nagarajan, Calvin Murdock, Ishwarya Ananthabhotla, Yijun Qian, Vamsi Krishna Ithapu, Dinesh Manocha, Ruohan Gao. ICCV 2025. [paper]

VITED: Video Temporal Evidence Distillation. Yujie Lu, Yale Song, Lorenzo Torresani, William Wang, Tushar Nagarajan. CVPR 2025. [paper]

BIMBA: Selective-Scan Compression for Long-Range Video Question Answering. Md Mohaiminul Islam, Tushar Nagarajan, Huiyu Wang, Gedas Bertasius, Lorenzo Torresani. CVPR 2025. 🏆 1st place, EgoSchema Challenge (CVPR 2025). [paper] [project]

Which Viewpoint Shows it Best? Language for Weakly Supervising View Selection in Multi-view Instructional Videos. Sagnik Majumder, Tushar Nagarajan, Ziad Al-Halah, Reina Pradhan, Kristen Grauman. CVPR 2025 (Highlight). [paper] [project]

ExpertAF: Expert Actionable Feedback from Video. Kumar Ashutosh, Tushar Nagarajan, Georgios Pavlakos, Kris Kitani, Kristen Grauman. CVPR 2025. [paper] [project]

VEDIT: Latent Prediction Architecture For Procedural Video Representation Learning. Han Lin, Tushar Nagarajan, Nicolas Ballas, Mido Assran, Mojtaba Komeili, Mohit Bansal, Koustuv Sinha. ICLR 2025. [paper]

User-in-the-loop Evaluation of Multimodal LLMs for Activity Assistance. Mrinal Verghese, Brian Chen, Hamid Eghbalzadeh, Tushar Nagarajan, Ruta Desai. WACV 2025 (Oral). [paper]

AnyMAL: An Efficient and Scalable Any-Modality Augmented Language Model. Seungwhan Moon*, Andrea Madotto*, Zhaojiang Lin*, Tushar Nagarajan*, Matt Smith, Shashank Jain, Chun-Fu Yeh, Prakash Murugesan, Peyman Heidari, Yue Liu, Kavya Srinet, Babak Damavandi, Anuj Kumar. EMNLP 2024 (Industry Track). (* equal contribution) [paper]

AMEGO: Active Memory from long EGOcentric videos. Gabriele Goletto, Tushar Nagarajan, Giuseppe Averta, Dima Damen. ECCV 2024. [paper] [project]

Propose, Assess, Search: Harnessing LLMs for Goal-Oriented Planning in Instructional Videos. Md Mohaiminul Islam, Tushar Nagarajan, Huiyu Wang, Fu-Jen Chu, Kris Kitani, Gedas Bertasius, Xitong Yang. ECCV 2024 (Oral). [paper] [project]

Step Differences in Instructional Video. Tushar Nagarajan, Lorenzo Torresani. CVPR 2024. [paper] [data/code]

Detours for Navigating Instructional Videos. Kumar Ashutosh, Zihui Xue, Tushar Nagarajan, Kristen Grauman. CVPR 2024 (Highlight). [paper] [project]

Video ReCap: Recursive Captioning of Hour-Long Videos. Md Mohaiminul Islam, Ngan Ho, Xitong Yang, Tushar Nagarajan, Lorenzo Torresani, Gedas Bertasius. CVPR 2024. 🏆 EgoVis Distinguished Paper Award (2025). [paper] [code]

Ego-Exo4D: Understanding Skilled Human Activity from First- and Third-Person Perspectives. Kristen Grauman, Andrew Westbury, Lorenzo Torresani, Kris Kitani, Jitendra Malik, Tushar Nagarajan*, Triantafyllos Afouras*, Kumar Ashutosh*, Vijay Baiyya*, Siddhant Bansal*, Bikram Boote*, Eugene Byrne*, Zach Chavis*, Joya Chen*, Feng Cheng*, Fu-Jen Chu*, Sean Crane*, Avijit Dasgupta*, Jing Dong*, Maria Escobar*, Cristhian Forigua*, Abrham Gebreselasie*, Sanjay Haresh*, Jing Huang*, Md Mohaiminul Islam*, Suyog Jain*, Rawal Khirodkar*, Devansh Kukreja*, Kevin J Liang*, Jia-Wei Liu*, Sagnik Majumder*, Yongsen Mao*, Miguel Martin*, Effrosyni Mavroudi*, Francesco Ragusa*, Santhosh Kumar Ramakrishnan*, Luigi Seminara*, Arjun Somayazulu*, Yale Song*, Shan Su*, Zihui Xue*, Edward Zhang*, Jinxu Zhang, Angela Castillo, Changan Chen, Xinzhu Fu, Ryosuke Furuta, Cristina Gonzalez, Prince Gupta, Jiabo Hu, Yifei Huang, Yiming Huang, Weslie Khoo, Anush Kumar, Robert Kuo, Sach Lakhavani, Miao Liu, Mi Luo, Zhengyi Luo, Brighid Meredith, Austin Miller, Oluwatumininu Oguntola, Xiaqing Pan, Penny Peng, Shraman Pramanick, Merey Ramazanova, Fiona Ryan, Wei Shan, Kiran Somasundaram, Chenan Song, Audrey Southerland, Masatoshi Tateno, Huiyu Wang, Yuchen Wang, Takuma Yagi, Mingfei Yan, Xitong Yang, Zecheng Yu, Shengxin Cindy Zha, Chen Zhao, Ziwei Zhao, Zhifan Zhu, Jeff Zhuo, Pablo Arbelaez, Gedas Bertasius, David Crandall, Dima Damen, Jakob Engel, Giovanni Maria Farinella, Antonino Furnari, Bernard Ghanem, Judy Hoffman, C. V. Jawahar, Richard Newcombe, Hyun Soo Park, James M. Rehg, Yoichi Sato, Manolis Savva, Jianbo Shi, Mike Zheng Shou, Michael Wray. CVPR 2024 (Oral). [paper] [project]

HT-Step: Aligning Instructional Articles with How-To Videos. Triantafyllos Afouras, Effrosyni Mavroudi, Tushar Nagarajan, Huiyu Wang, Lorenzo Torresani. NeurIPS 2023. [paper] [data/code]

Ego4D Goal-Step: Toward Hierarchical Understanding of Procedural Activities. Yale Song, Eugene Byrne, Tushar Nagarajan, Huiyu Wang, Miguel Martin, Lorenzo Torresani. NeurIPS 2023. 🏆 EgoVis Distinguished Paper Award (2025). [paper] [code]

EgoDistill: Egocentric Head Motion Distillation for Efficient Video Understanding. Shuhan Tan, Tushar Nagarajan, Kristen Grauman. NeurIPS 2023. [paper] [project]

EgoEnv: Human-centric environment representations from egocentric video. Tushar Nagarajan, Santhosh K. Ramakrishnan, Ruta Desai, James Hillis, Kristen Grauman. NeurIPS 2023 (Oral). [paper] [project] [code]

Ego4D: Around the World in 3,000 Hours of Egocentric Video. Kristen Grauman, Andrew Westbury, Tushar Nagarajan*, Eugene Byrne*, Zachary Chavis*, Antonino Furnari*, Rohit Girdhar*, Jackson Hamburger*, Hao Jiang*, Miao Liu*, Xingyu Liu*, Miguel Martin*, Ilija Radosavovic*, Santhosh Kumar Ramakrishnan*, Fiona Ryan*, Jayant Sharma*, Michael Wray*, Mengmeng Xu*, Eric Zhongcong Xu*, Chen Zhao*, Siddhant Bansal, Dhruv Batra, Vincent Cartillier, Sean Crane, Tien Do, Morrie Doulaty, Akshay Erapalli, Christoph Feichtenhofer, Adriano Fragomeni, Qichen Fu, Abrham Gebreselasie, Cristina Gonzalez, James Hillis, Xuhua Huang, Yifei Huang, Wenqi Jia, Weslie Khoo, Jachym Kolar, Satwik Kottur, Anurag Kumar, Federico Landini, Chao Li, Yanghao Li, Zhenqiang Li, Karttikeya Mangalam, Raghava Modhugu, Jonathan Munro, Tullie Murrell, Takumi Nishiyasu, Will Price, Paola Ruiz Puentes, Merey Ramazanova, Leda Sari, Kiran Somasundaram, Audrey Southerland, Yusuke Sugano, Ruijie Tao, Minh Vo, Yuchen Wang, Xindi Wu, Takuma Yagi, Ziwei Zhao, Yunyi Zhu, Pablo Arbelaez, David Crandall, Dima Damen, Giovanni Maria Farinella, Christian Fuegen, Bernard Ghanem, Vamsi Krishna Ithapu, C. V. Jawahar, Hanbyul Joo, Kris Kitani, Haizhou Li, Richard Newcombe, Aude Oliva, Hyun Soo Park, James M. Rehg, Yoichi Sato, Jianbo Shi, Mike Zheng Shou, Antonio Torralba, Lorenzo Torresani, Mingfei Yan, Jitendra Malik. CVPR 2022 (Oral); TPAMI 2023 (invited article: Best Papers of CVPR). [paper] [project]

Environment Predictive Coding for Visual Navigation. Santhosh K. Ramakrishnan, Tushar Nagarajan, Ziad Al-Halah, Kristen Grauman. ICLR 2022. [paper] [project] [code]

Shaping embodied agent behavior with activity-context priors from egocentric video. Tushar Nagarajan, Kristen Grauman. NeurIPS 2021 (Spotlight). [paper] [project] [talk]

Ego-Exo: Transferring Visual Representations from Third-person to First-person Videos. Yanghao Li, Tushar Nagarajan, Bo Xiong, Kristen Grauman. CVPR 2021. [paper] [code]

Differentiable Causal Discovery Under Unmeasured Confounding. Rohit Bhattacharya, Tushar Nagarajan, Daniel Malinsky, Ilya Shpitser. AISTATS 2021. [paper] [code]

Learning Affordance Landscapes for Interaction Exploration in 3D Environments. Tushar Nagarajan, Kristen Grauman. NeurIPS 2020 (Spotlight). [paper] [project] [talk] [code]

Ego-Topo: Environment Affordances from Egocentric Video. Tushar Nagarajan, Yanghao Li, Christoph Feichtenhofer, Kristen Grauman. CVPR 2020 (Oral). [paper] [project] [talk] [code]

Grounded Human-Object Interaction Hotspots from Video. Tushar Nagarajan, Christoph Feichtenhofer, Kristen Grauman. ICCV 2019. [paper] [project] [code]

Attributes as Operators: Factorizing Unseen Attribute-Object Compositions. Tushar Nagarajan, Kristen Grauman. ECCV 2018. [paper] [code]

BlockDrop: Dynamic Inference Paths in Residual Networks. Zuxuan Wu*, Tushar Nagarajan*, Abhishek Kumar, Steven Rennie, Larry S. Davis, Kristen Grauman, Rogerio Feris. CVPR 2018 (Spotlight). (* equal contribution) [paper] [code] [talk]

CANDiS: Coupled & Attention-Driven Neural Distant Supervision. Tushar Nagarajan, Sharmistha Jat, Partha Talukdar. ACL 2017 (Workshop). [paper]

Computational antimicrobial peptide design and evaluation against multidrug-resistant clinical isolates of bacteria. Deepesh Nagarajan, Tushar Nagarajan, Natasha Roy, Omkar Kulkarni, Sathyabaarathi Ravichandran, Madhulika Mishra, Dipshikha Chakravortty, Nagasuma Chandra. JBC 2018. [paper] [code]